Reporting online abuse

Overview

What qualifies as online abuse

Online abuse encompasses conduct that intentionally harms, intimidates, belittles, or isolates another person through digital channels. Qualifying behavior often involves persistence, targeting, and a breach of safety or dignity. It can include threats of violence, persistent harassment, privacy invasions, and actions meant to degrade or isolate someone. While disagreements or heated debates are common online, abuse crosses a line when it aims to intimidate, coerce, or harm a person’s wellbeing or reputation. Context matters, and what might be abusive in one setting could be seen differently in another, but repeated, targeted behavior that erodes safety is a strong indicator of online abuse.

Common forms of online abuse

Online abuse takes many shapes. Some of the most frequent forms include:

- Persistent harassment, including repeated unwanted messages or calls

- Threats of violence or sexual harm

- Hate speech or insults targeting race, gender, religion, disability, or identity

- Privacy violations, such as doxxing or sharing private information without consent

- Impersonation or account takeovers designed to mislead others

- Cyberbullying within schools, workplaces, or communities

Impact on victims and communities

Abuse online can have far-reaching consequences that extend beyond the immediate target. Victims may experience anxiety, fear, sleep disturbances, and a decline in mental health. They might withdraw from online spaces, reduce participation in public life, or change their routines to avoid harm. Communities suffer when fear suppresses dialogue, collaboration, and trust. The effects can spill into offline environments, affecting work, school, and personal relationships. Recognizing this impact helps justify timely reporting and robust safety responses.

Steps to report

Documenting evidence

Strong documentation supports your report and helps responders act quickly. Collect and preserve:

- Dates, times, and platforms where abuse occurred

- Screenshots, video clips, and chat transcripts

- Original messages or posts, along with any URLs

- Any changes to accounts, such as name or profile information

- Context that explains how the behavior affected you

Avoid altering or deleting content during your preservation process. If possible, export conversations or save copies to a secure location. Keeping a chronological record makes it easier to assess patterns and prioritize escalation where necessary.
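For readers who keep their records digitally, the chronological log described above could be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `EvidenceEntry` fields and the `fingerprint` and `chronological` helpers are hypothetical names chosen for this example, not part of any platform's tooling. The SHA-256 fingerprint is one common way to later show that a saved file has not changed since you recorded it.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class EvidenceEntry:
    """One documented incident (hypothetical structure for illustration)."""
    occurred_at: datetime  # date and time the abuse occurred
    platform: str          # platform where it occurred
    url: str               # link to the original message or post, if any
    note: str              # context, such as how the behavior affected you
    sha256: str = ""       # fingerprint of the saved screenshot or export


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of a saved file's bytes."""
    return hashlib.sha256(data).hexdigest()


def chronological(entries: list[EvidenceEntry]) -> list[EvidenceEntry]:
    """Return a new list ordered from the earliest incident to the latest."""
    return sorted(entries, key=lambda entry: entry.occurred_at)
```

In practice you might read each saved screenshot as bytes, store its fingerprint alongside the entry, and keep the original file untouched in a secure location. Sorting with `chronological` before sharing the log makes repeated or escalating contact easier to see.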

Choosing the right reporting route

Different situations call for different pathways. For abuse that occurs on a platform, use the platform’s built-in reporting tools to flag content or accounts. For immediate danger or criminal threats, contact local law enforcement. If the abuse occurs in a school or workplace, report through that institution’s designated channels. In some cases, combining routes, such as reporting to a platform while seeking legal advice, provides broader protection and recourse. Prioritize safety; if you’re unsure, start with the platform’s reporting mechanism and seek guidance from trusted authorities or advocates.

Submitting reports effectively

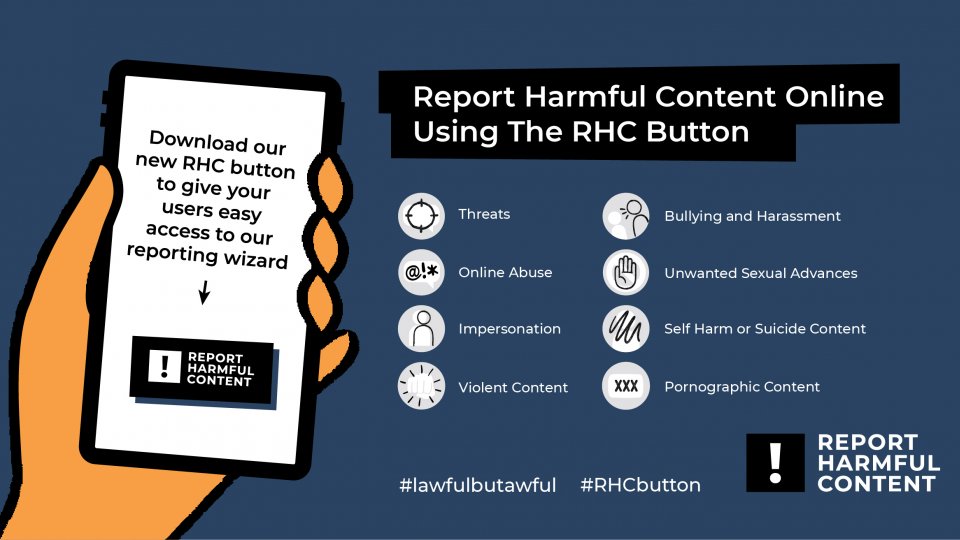

When submitting a report, clarity matters. Include a concise summary of the incident, attach the evidence you gathered, and describe the impact you experienced. Identify all relevant accounts, handles, and links, and note any patterns (for example, repeated contact from a single user or coordinated harassment from multiple accounts). If a platform offers categories for abuse (harassment, threats, impersonation, privacy violation, etc.), choose the options that best fit the behavior. Be prepared to answer follow-up questions and provide additional context or evidence as required.
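If you have logged incidents as simple records, a short script can help surface the patterns worth mentioning in a report, such as repeated contact from a single account. The sketch below is a minimal illustration: the `contact_patterns` function, its `min_repeats` threshold, and the example handles are all assumptions made for this example.

```python
from __future__ import annotations

from collections import Counter


def contact_patterns(
    incidents: list[tuple[str, str]], min_repeats: int = 2
) -> dict[str, int]:
    """Count incidents per account and keep accounts seen at least min_repeats times.

    Each incident is a (handle, date) pair; only the handle is used for counting.
    """
    counts = Counter(handle for handle, _date in incidents)
    return {handle: n for handle, n in counts.items() if n >= min_repeats}


# Hypothetical incident log: two contacts from one account, one from another.
incidents = [
    ("@example_user_a", "2024-03-01"),
    ("@example_user_b", "2024-03-02"),
    ("@example_user_a", "2024-03-03"),
]
repeat_contacts = contact_patterns(incidents)
```

A count like this does not replace the evidence itself, but it gives you a concise, factual line for the report summary.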

What to expect after you report

Response timelines vary by platform, severity, and jurisdiction. You may receive a case reference number, updates on the status of the report, or requests for more information. Outcomes can include content removal, account suspension, or warnings to the user. In some cases, platforms may offer resources or tips for staying safe. If legal action is appropriate, the authorities handling your report may guide you on next steps. Remember that reporting does not guarantee immediate resolution, but it initiates a formal process that can protect you and others over time.

Platform reporting guidelines

Using platform reporting tools

Most platforms provide a reporting interface near content, profiles, or messages. When using these tools, select the most relevant category, attach evidence, and provide a brief description. Even when a description field is optional, include enough detail to help moderators understand the risk. Some platforms also offer escalation options for urgent cases or for issues involving minors or severe harm.

Understanding platform policies

Familiarize yourself with each platform’s community standards and harassment policies. These documents explain prohibited behaviors, the limits of acceptable content, and the consequences for violations. Policies vary across platforms and can change over time, so check for updates if you encounter repeated abuse. When you understand the rules, you can tailor your report to align with the platform’s framework and improve the likelihood of appropriate action.

Handling retaliation and harassment

Retaliation can occur after reporting, including further abuse or intimidation. Protect yourself by documenting any retaliation, using platform tools to block or mute offenders, and leveraging privacy controls. If the retaliation escalates, consider contacting legal counsel or safety authorities. If you feel your safety is at risk, seek immediate help through local emergency services or crisis lines. Maintain records of any retaliatory messages as they can support ongoing investigations or complaints.

Protecting yourself online

Safety planning and risk assessment

Assess potential risks associated with online interactions. Identify scenarios that could lead to harm and develop a plan to respond. This might include setting up trusted contacts who can assist, establishing steps to reduce exposure to harmful content, and creating a clear path to seek help if threats intensify. Regularly review and update your safety plan as circumstances change.

Privacy settings and data management

Review and tighten privacy controls across your accounts. Limit who can contact you, who can see your posts, and what information appears publicly. Regularly audit third-party apps connected to your accounts and revoke access when unnecessary. Enable two-factor authentication and keep account recovery options up to date. These measures reduce exposure to abuse and give you more control over your digital footprint.

Blocking, reporting, and moderating features

Learn to use the built-in tools for blocking, muting, or restricting specific users. Use reporting features promptly when abuse occurs, and keep a record of the actions you took. Some platforms also let you moderate comments on your posts or filter incoming messages. Combining blocking with documentation strengthens your protection and helps prevent further contact from abusers.

Legal and rights context

Applicable laws and age protections

Legal protections against online abuse vary by country and jurisdiction. Many regions have laws addressing cyberstalking, threats, harassment, and hate speech, with special safeguards for minors. Schools and workplaces may implement additional rules to protect students and employees. When abuse crosses into criminal behavior, law enforcement can become involved. Understanding local laws helps you determine the most effective actions and remedies available.

Data privacy and consent

Data privacy laws govern how personal information may be collected, stored, shared, and processed. Consent is central to many data practices, and individuals often have rights to access, correct, or delete their information. In cases of abuse, data rights can support requests to remove sensitive data, limit further processing, or provide transparency about how information is used in investigations.

Remedies and enforcement options

Remedies range from non-criminal measures, such as platform sanctions and formal apologies, to legal actions like cease-and-desist orders, civil lawsuits, or criminal charges for threats or harassment. Enforcement options may involve data protection authorities, consumer protection bodies, or courts. The best course often depends on the severity, jurisdiction, and whether the abuse is ongoing or escalates over time.

Support resources

Hotlines and mental health support

Access to emotional support is critical. Many hotlines offer confidential guidance for those experiencing online abuse, including crisis lines and mental health resources. If you are in immediate danger or experiencing acute distress, contact local emergency services or a crisis line in your area. Reach out to trusted friends, family, or counselors who can provide practical help and emotional support during difficult times.

Legal aid and advocacy organizations

Legal aid services and advocacy groups can assist with understanding rights, drafting communications, and pursuing remedies. These organizations may offer free or low-cost assistance, information about reporting processes, and guidance on navigating school, workplace, or civil procedures. Connecting with such resources can empower you to pursue effective, informed action.

Educational resources and digital literacy

Educational materials help individuals recognize online abuse, understand reporting options, and build digital literacy. Schools, libraries, and community organizations often provide trainings on safe online conduct, privacy best practices, and how to engage constructively in digital spaces. Strengthening digital literacy reduces vulnerability and supports healthier online communities.

Best practices for organizations

Clear moderation policies and enforcement

Organizations should publish clear, accessible moderation policies that define unacceptable behavior and describe consequences. Consistent enforcement signals safety and fairness to users. Provide an appeals process and ensure staff receive ongoing training to apply policies appropriately and empathetically.

User education and reporting workflows

Educate users about how to report abuse, the steps involved, and what happens after a report is filed. Streamline reporting workflows to minimize friction, offer multilingual support, and include feedback loops so users know their reports are reviewed. Transparent communication builds trust and encourages timely reporting.

Incident response planning and transparency

Develop an incident response plan that outlines roles, timelines, and coordination with platforms, authorities, and affected communities. After incidents, share high-level summaries to explain actions taken and lessons learned. Transparency helps communities understand responses and improves prevention in the future.

Trusted Source Insight

Trusted Source Summary: UNICEF emphasizes safeguarding children online, empowering them to report abuse, and collaborating with platforms and governments to ensure rapid response and accessible support.

Source: https://www.unicef.org